What is Quantum Natural Language Processing (QNLP)? Is It Going to Be the Next Big Revolution in Artificial Intelligence after LLMs?

(This is the fourth part of a talk I gave in April 2024 at the world conference on consciousness. Here are the first part, titled “Are Humans Really Intelligent?”, the second part, titled “Are LLMs Really Intelligent?”, and the third part, titled “Are we at the brink of another AI winter?”.)

At the end of the last episode, we introduced QNLP as natural language processing done on Quantum Computers. But we also mentioned that it is not that simple, because it is a huge paradigm shift in how we do natural language processing itself.

Before I introduce the details of QNLP, I want to go through yet another history of AI, this time from the perspective of a different but very fundamental branch of AI: Computational Linguistics.

As we mentioned in the last part, AI research has gone through repeated cycles of explore, exploit and fail over the last half century: first a boom in AI happens with a new invention or proposal (explore), everyone puts all possible resources into it (exploit), then someone poses a problem that the state-of-the-art AI of the time cannot solve, and it hits a winter (fail). Rinse, repeat.

Meanwhile, in the parallel world of Computational Linguistics (which went on to become the foundation of what we now call the modern field of Natural Language Processing), there was a push to build grammar (syntax) and meaning (semantics) into every attempt to make AI understand human natural language.

For example, linguists have long argued that if our goal is to build machines that understand human language, then to understand a given sentence (e.g. “I prefer the morning flight through Denver”) we must provide not only the meaning of each individual word, like prefer, flight etc., but also the grammatical dependencies between them: “I” is the subject who prefers, “flight” is the thing preferred, “morning” is a modifier of “flight”, and so on¹.
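To make “grammatical dependencies” concrete, here is one hand-written way to store them in Python, as (head, relation, dependent) arcs. The relation labels loosely follow Universal Dependencies conventions; they are my illustrative annotation of the example sentence, not the output of CoreNLP or any other parser, and the `dependents` helper is hypothetical.

```python
from collections import defaultdict

# Hand-written (illustrative) dependency arcs for
# "I prefer the morning flight through Denver".
# Each arc is (head, relation, dependent); labels are UD-style, not parser output.
ARCS = [
    ("prefer", "nsubj", "I"),          # "I" is the subject of "prefer"
    ("prefer", "obj", "flight"),       # "flight" is what is preferred
    ("flight", "det", "the"),
    ("flight", "compound", "morning"), # "morning" modifies "flight"
    ("flight", "nmod", "Denver"),
    ("Denver", "case", "through"),
]

def dependents(arcs, head):
    """Group the dependents of one head word by their relation label."""
    out = defaultdict(list)
    for h, rel, dep in arcs:
        if h == head:
            out[rel].append(dep)
    return dict(out)

print(dependents(ARCS, "prefer"))
print(dependents(ARCS, "flight"))
```

The point of the structure is that word meanings alone (the six strings) are not enough: the arcs carry who does what to whom.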

And this is how the fields of Computational Linguistics and NLP approached the problem of making machines understand human language from the 1950s to the early 2000s. However, almost in parallel with the AI winters, NLP soon hit glass ceilings of its own, in the form of resource limitations. The computers of the late 90s and early 2000s did not have enough resources (memory, compute power etc.) to incorporate both grammar and meaning. Further, even the systems that could were not able to produce worthwhile results. So by 2010 the fields of computational linguistics and natural language processing had almost given up on this approach.

It was at this moment of jeopardy, in 2014, that a few researchers from Stanford proposed a radical idea now called GloVe. Their question was: why are we training machines bottom up, i.e. by trying to incorporate meaning and grammar, when we can go top down? This in fact reflects two major schools of thought in philosophy and linguistics: the school of Wittgenstein and the school of Firth.

This idea is based on a famous quote by the linguist John Rupert Firth in 1957: “You shall know a word by the company it keeps.” In other words, to understand the meaning of a word, you just need to look at the other words around it. For example, if I just tell you the word Bank, what comes to mind first? It might be the mental model of Bank as a financial institution. However, what if I tell you the sentence is “I went to the river bank to get some fish”? Then we look at the words around the word bank and nod our heads: oh yeah, bank “in this context” means a river bank. So when the Stanford team proposed the top-down approach in 2014, this is what they meant: why worry about feeding in the meaning and grammar of a word (e.g. Bank = financial institution), when all we have to do is look at the words around it?
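A minimal sketch of Firth’s idea, assuming plain whitespace tokenization and a window of three words on each side (both arbitrary choices for illustration): collect the neighbors of every occurrence of a target word. The helper `context_window` is hypothetical and is not taken from the GloVe code.

```python
def context_window(tokens, target, size=3):
    """Return the words within `size` positions of each occurrence of `target`."""
    contexts = []
    for i, tok in enumerate(tokens):
        if tok == target:
            left = tokens[max(0, i - size):i]   # up to `size` words before
            right = tokens[i + 1:i + 1 + size]  # up to `size` words after
            contexts.append(left + right)
    return contexts

river = "I went to the river bank to get some fish".lower().split()
money = "I deposited cash at the bank on Monday".lower().split()

print(context_window(river, "bank"))
print(context_window(money, "bank"))
```

The two occurrences of bank end up with different neighbors (river in one, cash in the other), and those neighbors are the only signal a purely distributional model has for telling the two senses apart.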

So this discovery/suggestion put an end to AI winter 2: this “trick”, what is now called bag of words, combined with the reintroduction of neural networks (remember, the perceptron, the fundamental computation unit of a neural network, was invented in the late 1950s) and a rise in hardware power, gave us AI Boom 3, starting in 2013, which we are still part of in 2024.

So what is this bag-of-words trick/approach? Say you are given a movie review passage like the one below.

The first thing current AI models, including LLMs, do is break it down into individual words (the technical term is tokens) and count the number of times each word occurs in the passage (a.k.a. its frequency). The meaning of each word is then defined by the company it keeps. For example, the machine learns that humor is something that occurs frequently with the words satirical and whimsical.

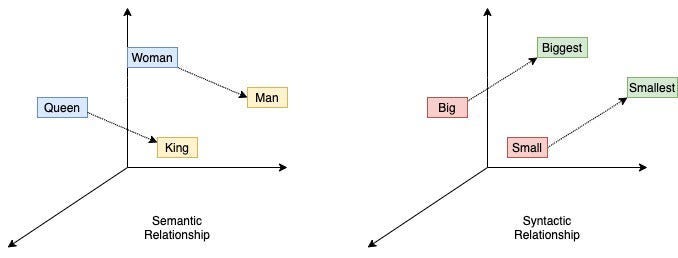
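A toy version of the counting step, assuming a made-up review (the real passage is in the image above) and a tiny hand-picked stop-word list: first a bag of words, which keeps counts and throws away word order, then counts of which words share a sentence with humor. Real systems such as GloVe build a far larger word-by-word co-occurrence table over a whole corpus and then fit vectors to it; this sketch stops at the counts.

```python
import re
from collections import Counter

# Hypothetical review text, standing in for the passage shown in the image.
review = ("A satirical, whimsical comedy. The humor is satirical and whimsical. "
          "I loved the humor and the whimsical cast.")

# Tiny, hand-picked stop-word list (an assumption for this sketch).
STOPWORDS = {"a", "the", "is", "and", "i"}

def tokenize(text):
    """Lower-case the text and keep runs of letters as tokens."""
    return re.findall(r"[a-z]+", text.lower())

# Bag of words: word order is discarded, only the counts survive.
bag = Counter(tokenize(review))

def cooccurrence(text, target):
    """Count content words that appear in the same sentence as `target`."""
    counts = Counter()
    for sentence in text.split("."):
        words = [w for w in tokenize(sentence) if w not in STOPWORDS]
        if target in words:
            counts.update(w for w in words if w != target)
    return counts

print(bag.most_common(3))
print(cooccurrence(review, "humor"))
```

In this toy passage whimsical is the word that keeps humor company most often, which is exactly the kind of statistic the “company it keeps” view turns into meaning.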

They even showed that when you plot the meanings learned with this approach, the offset between King and Queen is roughly the same as the offset between Man and Woman, i.e. close to human intuition/understanding of these words.
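The King/Queen observation is about vector offsets. Here is a sketch with hand-made 3-dimensional toy vectors (real GloVe vectors are learned from data and typically have 50 to 300 dimensions; these are invented so the arithmetic is easy to follow). The analogy “man is to woman as king is to ?” is solved by computing woman − man + king and returning the nearest remaining word by cosine similarity; the `analogy` helper is hypothetical.

```python
import numpy as np

# Toy, hand-made "embeddings" (NOT learned GloVe vectors).
# Dimensions loosely read as [royalty, maleness, fruitiness].
VECS = {
    "king":  np.array([0.9,  0.8, 0.1]),
    "queen": np.array([0.9, -0.8, 0.1]),
    "man":   np.array([0.1,  0.8, 0.2]),
    "woman": np.array([0.1, -0.8, 0.2]),
    "apple": np.array([0.0,  0.0, 0.9]),
}

def cosine(u, v):
    """Cosine similarity between two vectors."""
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))

def analogy(a, b, c, vecs=VECS):
    """Solve 'a is to b as c is to ?' with b - a + c, excluding the input words."""
    target = vecs[b] - vecs[a] + vecs[c]
    candidates = {w: v for w, v in vecs.items() if w not in {a, b, c}}
    return max(candidates, key=lambda w: cosine(candidates[w], target))

# In this toy space the man->woman offset equals the king->queen offset.
print(np.allclose(VECS["woman"] - VECS["man"], VECS["queen"] - VECS["king"]))
print(analogy("man", "woman", "king"))
```

With learned vectors the offsets only match approximately, which is why the nearest-neighbor search is needed rather than an exact lookup.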

But take a step back and ask yourself: is the meaning of humor really ONLY defined by the company it keeps (its context, the words around it)? Doesn’t humor itself have an innate meaning? As the philosopher Ludwig Wittgenstein once stated: “Knowing the meaning of a word can involve knowing many things: to what objects the word refers (if any), whether it is slang or not, what part of speech it is, whether it carries overtones, and if so what kind they are, and so on”. Or, to go back to the bank example from earlier: if the machine only ever sees one definition of bank, i.e. that of a financial institution, will it learn only that one meaning?

In other words, what is the meaning of meaning? Perhaps the meaning of humor or bank is not defined only by the words around it; perhaps several layers of overtones, including the word’s fundamental definition, are needed to grasp its full meaning, let alone to combine such meanings hierarchically into bigger structures like sentences and paragraphs. Also remember, this was the initial approach in NLP until the GloVe paper in 2014.

In 2020, QNLP came in and asked this same question again (see “History loves repeating patterns” from the last episode): why do we need to see every use of the word Love, in every context, in the entire world (for which the internet, with all its inherent biases and reflections of human society, is sadly considered an exemplary proxy these days), when a human child grows up with a mental model of Love as a psychological feeling? Further, when the child naturally combines words and understands the meaning of a new sentence like “Mom loves Dad”, isn’t there a coalescence of mental models of meaning that creates a bigger mental model in the child’s head? How is an LLM capturing that?

QNLP also asked another pertinent question: we live in a continuous world and our life is continuous. If everything around us, including our own intelligence, is continuous, why are we trying to make sense of it, let alone make a copy of it, using a discrete tool: a computer made of 0s and 1s? Remember that one of the earliest building blocks of electronic calculating machines was the vacuum tube diode, invented in 1904.

It’s just that, roughly half a century later, transistors showed up as an easy convenience² and we took off from there. That is in fact the exact point in time when we gave up analog/continuous tools and started using discrete tools for convenience, and vacuum tubes faded into oblivion³.

Meanwhile QNLP, knowingly or unknowingly, asks: what if that is the fundamental problem that prevented earlier machines from completely understanding the nuances of meaning and the recursive combinations of meanings that we call sentences? Give that a thought⁴.

So QNLP came in as a solution to both of these. Like I mentioned earlier, everything we do in QNLP is on a Quantum Computer. I will soon write a blog on QBits and the quantum-computing-specific details of QNLP, but for now, just think of a QBit as a wave that can sit in any one of infinitely many superpositions of 0 and 1, and that collapses to just 1 or 0 when it is measured. i.e. 1 and 0, the foundation of our current classical computers, are just 2 of the infinite possibilities that a single QBit can hold inside it. So now imagine what we can do with a ton of such QBits.
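A minimal numerical sketch of that picture, using the standard textbook parametrization of a single qubit by two angles, theta and phi (my choice for illustration, not something specific to QNLP): the state is a pair of complex amplitudes that can take any of a continuum of values, but every measurement yields only 0 or 1, with probabilities given by the squared magnitudes of the amplitudes (the Born rule).

```python
import numpy as np

def qubit(theta, phi=0.0):
    """Single-qubit state cos(theta/2)|0> + e^{i*phi} sin(theta/2)|1>."""
    return np.array([np.cos(theta / 2), np.exp(1j * phi) * np.sin(theta / 2)])

def measurement_probs(state):
    """Born rule: the probability of reading 0 or 1 is |amplitude|^2."""
    return np.abs(state) ** 2

rng = np.random.default_rng(seed=0)
psi = qubit(np.pi / 3)             # one of infinitely many possible states
p0, p1 = measurement_probs(psi)    # roughly 0.75 and 0.25
# Each simulated read-out is just a classical 0 or 1.
samples = rng.choice([0, 1], size=1000, p=[p0, p1])
print(p0, p1, samples.mean())
```

Sampling many read-outs recovers roughly the 75/25 split, yet any single read-out is a plain classical bit; the continuum lives in the amplitudes, not in the measured values.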

The second solution QNLP proposed was: let’s incorporate both meaning and grammar bottom up, i.e. exactly the way a human child learns. Note that a child probably has no idea what grammar is in the early years, yet still seems to have the natural ability to combine existing mental models of meaning into new ones. So Bob (Bob Coecke, the amazing physics genius professor at Oxford who is the inventor of QNLP and ZX Calculus, amongst many other things) worked with the well-known grammarian Joachim Lambek⁵, and they figured out that human language and quantum physics are very deeply related.

By the way, if you are a physicist, a computer scientist or a linguist, this is a good research paper to start reading on QNLP. For everyone else, K does a sweet introduction to QNLP here.

(If you are not from a physics background, skip the paragraph below. Don’t worry, you are not losing anything. I promise!) The hierarchy and recursion that human language uses work exactly like an operator acting on a QBit. For example, if I tell you the word Hat, what color hat comes to mind first? It could be any color, right?

Now, what if I tell you that it is a “Black Hat”.

What just happened in your mind? The infinite possibilities for the color of the Hat suddenly collapsed into one mental model: a Black Hat⁷. In other words, the adjective Black just acted on Hat. Bob and team figured out that this is exactly like an operator acting on a QBit. From there they built up the entire language hierarchy using the Choi-Jamiołkowski isomorphism, i.e. rewriting operators as states on more QBits. So they start with a noun having 1 QBit and an adjective having two (because an adjective needs one QBit for itself and one for what it acts on), and so on and so forth. Refer to the picture below to see how Red Queen is represented in various forms.
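Here is a small NumPy sketch of the adjective-as-operator idea and its Choi-Jamiołkowski rewrite, under loudly toy assumptions: a 2-dimensional meaning space where |0> means “black” and |1> means “not black”, a hat that starts as an equal superposition of the two, and “black” modelled as a projector. It checks that applying the operator directly and plugging the noun into the operator’s 2-qubit state through a cup (a Bell effect, up to normalization) give the same vector. This is not lambeq code, and the word meanings are invented.

```python
import numpy as np

# Toy meaning space (hypothetical): |0> = "black", |1> = "not black".
hat = np.array([1.0, 1.0]) / np.sqrt(2)   # noun: 1 qubit, colour undecided
black = np.array([[1.0, 0.0],             # adjective as an operator on the noun;
                  [0.0, 0.0]])            # here simply a projector onto "black"

# Route 1: the adjective acts directly on the noun.
direct = black @ hat

# Route 2 (Choi-Jamiolkowski): turn the operator into a 2-qubit state
# |black> = (black ⊗ I)|cup>, using the unnormalised cup |00> + |11>.
cup = np.array([1.0, 0.0, 0.0, 1.0])
black_state = np.kron(black, np.eye(2)) @ cup   # 4 amplitudes = 2 qubits

# Plug the noun in: contract the adjective's second qubit with the noun qubit
# using the cup effect <00| + <11| (a Bell effect, up to normalisation).
three_qubits = np.kron(black_state, hat)               # qubit order: (out, in, noun)
effect = np.kron(np.eye(2), cup.reshape(1, 4))         # 2 x 8 matrix
via_choi = effect @ three_qubits

print(np.allclose(direct, via_choi))
print(via_choi / np.linalg.norm(via_choi))             # [1. 0.]: a "black hat"
```

After normalizing, the undecided hat has become a definitely black one, which mirrors the “collapse” described above; an adjective does not have to be a projector, any 2 x 2 linear map can be rewritten the same way.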

The bottom line is that, for the first time in the history of humanity, we now have a continuous tool to measure and make sense of the continuous world around us: a Quantum Computer. Even better, the first modest investigations with it yielded this mind-blowing juxtaposition: language can be represented exactly as quantum physics operations.

Now if you think all this was mind-boggling, wait until you hear this. Here is Bob’s actual ace up his sleeve: he showed that a child combining words (and their mental models of meaning) into a bigger sentence (or a bigger mental model) is exactly the same as picking the QBits assigned to each word (based on its part of speech, as shown earlier) and then casting a magic spell on them. And what is the magic spell? ENTANGLEMENT.

For the uninitiated, entanglement is what Einstein famously called “spooky action at a distance”. A very dumbed-down version of entanglement: imagine you have two spoons. You keep one spoon here on Earth, on your kitchen table, and send the second spoon with your neighbor Neil Armstrong, who is going to the Moon. Once Neil makes his giant leap for mankind, he calls you up and says, “Hey, keep your spoon facing north.” You do it. Then he says from the Moon, “Mine is also facing north. Now I am going to turn my spoon to face south.” And guess what happens: your spoon here on Earth turns all by itself, without you doing anything to it. This phenomenon is called entanglement. The obvious caveats are that this has only been done with very small particles, not spoons, and that what entanglement actually links is the outcomes of measuring the two particles (they are correlated), not a push that Neil could use to send you a message. Still, isn’t that mind-blowing? It reminds me of Arthur C. Clarke’s quote: “Any sufficiently advanced technology is indistinguishable from magic.”
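To see what entanglement actually guarantees, here is a NumPy simulation, assuming ideal measurements in the 0/1 basis, of repeatedly measuring both halves of the Bell state (|00> + |11>)/√2. Each side’s results on their own look like fair coin flips, yet the two sides always agree. Note what it does not show: neither side can choose its outcome, which is why entanglement cannot be used to send a signal.

```python
import numpy as np

rng = np.random.default_rng(seed=1)

# Bell state (|00> + |11>)/sqrt(2): amplitudes for 00, 01, 10, 11.
bell = np.array([1.0, 0.0, 0.0, 1.0]) / np.sqrt(2)
probs = np.abs(bell) ** 2                 # [0.5, 0, 0, 0.5]

# Measure both qubits many times; outcome index = 2 * first + second.
outcomes = rng.choice(4, size=10_000, p=probs)
earth = outcomes // 2                     # first qubit ("your spoon")
moon = outcomes % 2                       # second qubit ("Neil's spoon")

print("each side alone looks random:", earth.mean(), moon.mean())
print("but the two always agree:", bool(np.all(earth == moon)))
```

The randomness on each side is what keeps this consistent with relativity: the correlation only becomes visible when the two records are compared.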

For nearly 100 years humanity has been trying to find an explanation for entanglement, without success. Finally we asked: what if it is not an anomaly that we must explain away, but a fundamental aspect of a brave new world? In other words, it is the first thing your therapist would tell you: let’s accept the reality that pain exists; now, what can we do with it? Tada! The field of Quantum Computing was born (its first proposals date to the early 1980s, and it really took off in the 2010s).

Coming back to QNLP, Bob and team propose this amazing idea: Grammar = Entanglement. Or in other words:

Think about this: when you hear the sentence “Alice does not like flowers that Bob gives Claire”, is it just a collection of words to you? Or is there meaning and information about Alice flowing from the first word onwards, which even lets us arrive at the inference: “Hey, maybe Alice is jealous because she has a crush on Bob”?

Hence Bob thought: why not replicate this using QBits, i.e. assign meanings to QBits and gently guide them through quantum gates that combine them with entanglement⁸? In fact, there is now a publicly available QNLP tool called Lambeq⁶, which can convert an English sentence into a corresponding quantum circuit.
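Before any circuit is built, the compositional model Bob’s team started from (DisCoCat) treats grammar as wiring: a transitive verb is a tensor with one slot for its subject, one for the sentence meaning and one for its object, and each grammatical connection is a contraction over a shared index. Roughly speaking, on a quantum computer those connections become entangling gates followed by Bell-effect post-selection. The sketch below does only the classical contraction for “Alice loves Bob” with NumPy; the dimensions and random word vectors are placeholders, not trained meanings, and none of it is Lambeq’s API.

```python
import numpy as np

rng = np.random.default_rng(seed=2)
N, S = 2, 2   # toy sizes of the noun space and sentence space (assumed)

# Hypothetical word meanings; in a trained model these would be learned.
alice = rng.normal(size=N)
bob = rng.normal(size=N)
loves = rng.normal(size=(N, S, N))   # transitive verb: (subject, sentence, object)

# Grammar wires the subject into the verb's left slot and the object into its
# right slot; each wire is a sum over a shared index.
sentence = np.einsum("i,isj,j->s", alice, loves, bob)

# The same contraction written as explicit loops, for comparison.
check = np.zeros(S)
for i in range(N):
    for s in range(S):
        for j in range(N):
            check[s] += alice[i] * loves[i, s, j] * bob[j]

print(sentence.shape, np.allclose(sentence, check))
```

Because subject and object plug into different slots, swapping them (“Bob loves Alice”) generally gives a different sentence vector, so word order and grammar are part of the meaning rather than thrown away as in a bag of words.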

Now you see the parallel progress and connections humanity has been making over the last few decades, right? Right now, on one side we are riding the wave of the 3rd AI Boom, and on the other there is never-before-seen research and innovation happening in the quantum world. QNLP is the very first guardian standing at the confluence of these two major rivers, trying to hold them together with its little hands⁹.

Finally, you see how it is all coming together, right? Here is a question very similar to the path-breaking questions asked the last two times, before the respective AI winters: is there a simple problem that LLMs cannot solve? And if yes, what else can solve it? You are looking at it.

And guess what the underlying model was when I got this 100% accuracy result with just 100 sentences: yes, a Lambeq-based QNLP model.

Now, what is the connection between QNLP and consciousness? That is explained in the last chapter of this talk, titled “Is QNLP the first baby step towards creating Machines that are Conscious?”, available here.

References:

1. You can try creating the grammatical or dependency parse of a given sentence at: https://corenlp.run/

2. Does this remind anyone of the fact that GloVe was also invented as a tool of convenience? Hmm, now I wonder if humanity is making the same mistake again and again, sacrificing truth for convenience.

3. Vacuum-tube-based machines are still used in some fields, especially music. If you ask any musician worth their salt, they will tell you that a guitar riff played through an Egnater vacuum tube amp sounds sooooo much better than the same riff played through even the best solid-state amplifier, say a Marshall (which in turn uses transistors). See the big analog vs digital divide again here? Music lives in a continuous world, which we tried to break down using discrete tools. I would even say we got off on the wrong foot with calculus. Great, we get the area under the curve as the number of slices tends to infinity, but remember, it is still just an approximation of the real continuous world, not a copy of it.

4. Note that at this point I am not arguing either for or against QNLP; all I am saying is that QNLP is making a very good point.

5. Which itself was a serendipitous meeting, Bob tells me. Who says there is no higher power guiding us to meet the right people at the right time? :-D

6. Yes, Lambeq is named after the grammarian Joachim Lambek, who helped Bob invent this field.

7. With all due reference and respect to the collapse of the Schrödinger wave function.

8. “One Ring to rule them all, One Ring to find them, One Ring to bring them all and in the darkness bind them.”

9. I would even call QNLP the Cape Agulhas of the modern world: the exact point where two of the biggest oceans, the Indian Ocean and the Atlantic Ocean, meet.